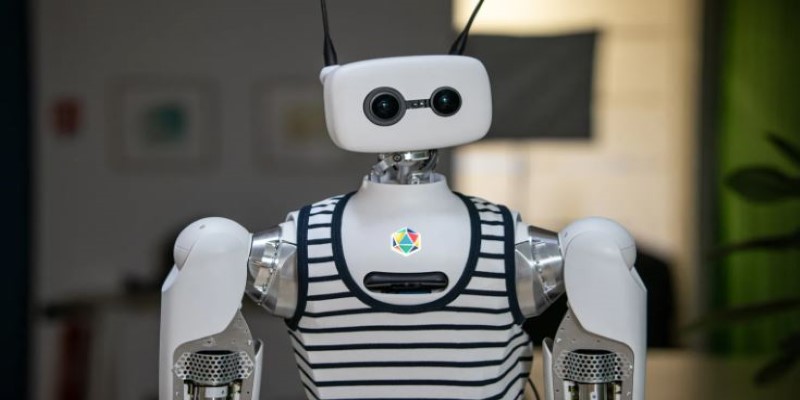

Robots have long been symbols of futuristic tech, but they’ve mostly remained out of reach—either locked behind patents or priced beyond practicality. That might be about to change. Hugging Face, best known for making advanced AI tools accessible through open-source, is stepping into robotics by acquiring Pollen Robotics, the team behind the open-source humanoid robot Reachy.

This isn’t a flashy pivot—it’s a natural extension of Hugging Face’s belief that AI should be shared, transparent, and usable by everyone. With this move, they’re taking machine learning off the screen and giving it arms, eyes, and a place in the physical world.

The acquisition of Pollen Robotics wasn't a random leap. Hugging Face has long promoted an open approach to artificial intelligence, allowing developers to freely use and contribute to tools. Pollen Robotics shared that vision. Its robot, Reachy, was designed to be open from the start. With modular components and a Python programming interface, Reachy was built for researchers, educators, and developers who wanted more control and transparency.

Hugging Face saw in Pollen a way to extend its mission into the physical world. AI is no longer just software that processes text or images. Increasingly, it's being connected to sensors, cameras, motors, and physical environments. Hugging Face recognized that the next step in making AI more accessible was letting it interact with the world around us. Owning the hardware means the company can shape how this happens.

The acquisition also gives Hugging Face a head start in a space dominated by proprietary systems. Most robots today are either closed systems or require expensive licensing to use. Hugging Face's brand has always leaned toward democratizing access, and this move into robotics continues that approach.

Open-source robotics is still a niche within a niche. While open-source software has become widely accepted in many industries, hardware lags. Building physical devices is expensive and complicated; few companies want to share their designs freely. Pollen Robotics was one of the few exceptions, and now Hugging Face is taking that philosophy forward with more resources.

This move brings attention and credibility to the open-source robotics community. Hugging Face has the scale and reputation to introduce open robotics to more developers. It's also one of the few AI companies that has consistently shown interest in keeping its projects transparent and community-driven. With its involvement, people who were hesitant to explore open hardware might now reconsider.

Developers working on AI models can test their work in real-world settings. Educators will have more practical tools to teach robotics. Startups and researchers who can't afford closed, commercial robots may now have a solid, flexible platform from which to work. This expansion into physical AI systems makes the entire ecosystem more dynamic.

Reachy isn't just a robot; it's an entire platform. Originally released in 2020, it's a humanoid robot equipped with a modular head, torso, and robotic arms. Reachy stands out because it's not locked behind licenses or built with obscure systems. Developers can code with Python and freely access its 3D-printed parts and mechanical documentation.

Under Hugging Face, Reachy is expected to get more robust community support and integrations with popular AI frameworks. That includes tools like Transformers and Diffusers, which are already widely used for language and vision tasks. The goal is to make a robot that works seamlessly with the models developers are already using. It could recognize speech, hold simple conversations, or perform tasks based on visual input—using open models anyone can inspect and improve.

The hardware will likely evolve, too. Hugging Face has not announced specifics, but with its resources, future versions of Reachy could become more affordable and scalable. That would make it more accessible to schools, research labs, and small businesses.

Hugging Face's growing community could also contribute new modules, features, or extensions. Just as they've done with models and datasets, developers may be able to share robotics tools or training environments, speeding up innovation without starting from scratch.

The merger of AI and robotics has been on the horizon for a while. Still, it often happens in isolated pockets—large labs, expensive R&D departments, or startups with limited public access. Hugging Face is trying to change that. By acquiring Pollen Robotics, the company wants to make it easier for anyone to build intelligent machines without hitting technical or financial roadblocks.

This move also reflects a shift in the industry. AI companies aren’t just making models anymore—they're thinking about where those models will run and what real-world problems they can solve. Hugging Face entering hardware means it wants to support that entire pipeline, from software development to physical deployment.

It's too early to say exactly how the robotics product line will grow, but the foundation is clear. The company will apply its open, community-led model to robotics just as it has with machine learning tools. That means better access, better documentation, and a feedback loop between users and developers that improves the platform over time.

This could change how robots are used in research, education, customer service, and even small-scale automation. Instead of relying on black-box systems that are hard to understand or modify, developers will have access to machines they can tweak, rebuild, and study. That openness helps avoid the kind of bottlenecks that have slowed innovation in the past.

At a time when more people are thinking about the ethical and social implications of AI, Hugging Face’s push for transparency feels timely. It reinforces the idea that progress in AI doesn’t have to come with secrecy or exclusivity.

Hugging Face's acquisition of Pollen Robotics marks a thoughtful shift toward hands-on AI development. By combining open-source software with accessible hardware, it invites a wider range of people to experiment, build, and learn. This step makes robotics less exclusive and more collaborative, giving developers and educators a clear path to working with intelligent machines in practice. It's a grounded move with far-reaching potential in real-world AI use.
